The Whole Picture: Functional Requirements Are Important, But Not Sufficient in Selecting Software

If you are a business leader, are you frustrated with an IT organization that gives you all the reasons why your purchase of a software application won’t work rather than figuring out how to make it work?

If you are an IT leader, do you find your concerns about application security, performance, compliance and a slew of other important requirements dismissed by business leaders as unnecessary roadblocks?

With business-led IT gaining traction and becoming an acceptable way of provisioning applications, the adversarial relationship reflected in the questions above will only intensify. But there is a solution.

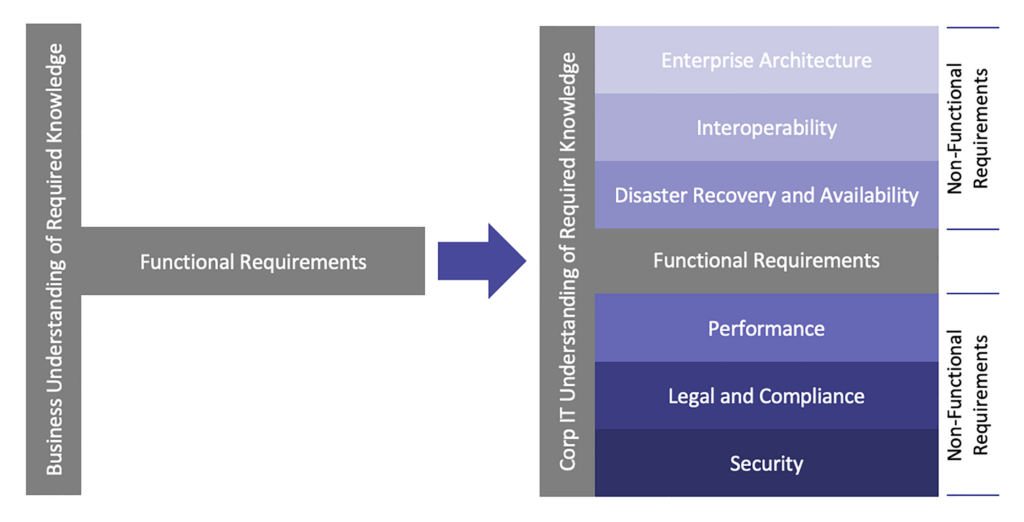

In our most recent white paper, “Guided Autonomy Governance Model: Resolving the Tension Between Corporate IT and Business-led IT,” we described the challenge of balancing corporate IT’s need for a stable and secure suite of applications with the business’s need for speed, agility and innovation. Often these two needs are in conflict. It is not uncommon for business leaders making software investments to focus on the functional needs of a new application because that is what they know. However, they often miss the important aspects of what it takes to sustain a stable and secure computing environment (see Figure 1).

Although many business leaders know there are more factors to consider beyond defining the functional requirements of the software they wish to purchase and implement, they often lack the skills, time or patience to understand the importance of non-functional requirements (NFRs) and incorporate them into the software selection process. This failure to include NFRs upfront in the software selection process can create a significant business risk later, when compliance, performance or security becomes a problem.

So, what exactly are NFRs and why are they important?

For many technology investments, NFRs typically include enterprise architecture, interoperability, disaster recovery and availability, performance, legal, compliance and security. Each of these is explained below:

- Enterprise Architecture – A truism in IT is that the greater the complexity of the computing environment (applications and infrastructure), the higher the risk of unplanned downtime and the greater the cost to support. Therefore, it is critical for corporate IT organizations to define and enforce a set of standards, policies and principles that apply to technology deployed anywhere within the company’s computing environment, whether plugged into the wide area network or standalone. That said, guided autonomy also recognizes that it is not possible or even prudent to mandate hard standards for every piece of technology brought into the company. Therefore, some measure of latitude makes sense.

- Interoperability – Most individual, workgroup, department and enterprise applications require data from, or provide data to, other applications within the company’s application portfolio. Even simple Excel spreadsheets need data fed from transactional systems. To support this data exchange requirement, several data sharing patterns can be used between applications. These patterns range from simple flat files exchanged through a secure FTP site, to published APIs that expose internal application functionality to other applications in a structured way, to pre-built connectors between commercial off-the-shelf applications. When purchasing or developing custom applications, the exchange of data and the related patterns must be considered during vendor evaluation or the design of the custom application. Addressing the requirement for interoperability after the fact can be quite expensive.

- Disaster Recovery – Most software-as-a-service (SaaS), platform-as-a-service (PaaS) and infrastructure-as-a-service (IaaS) offerings from cloud vendors operate in high availability environments that provide from 99.5% to 99.95% uptime as a standard (i.e., non-negotiable) offering. Recovery-time objectives (acceptable downtime per incident) and recovery-point objectives (maximum data loss per incident) can also be negotiated with most third-party software and managed service vendors. These same DR considerations need to be addressed for custom-developed applications based on business criticality, balanced against the cost of providing a high level of resilience and availability.

- Performance – Generally, most third-party applications have gone through extensive performance testing and tuning to ensure they can handle the volume of transactions that they are required to process. With the advent of cloud hosting solutions like AWS, Azure and GCP, hardware and storage capacity can be dynamically provisioned to accommodate most third-party applications, providing the ability to scale compute and storage up and down as needed. With custom-developed applications, the same performance testing and tuning needs to occur under normal and peak transactional demands. Otherwise, excessive response times will negatively impact the user experience. If these custom-developed applications are customer-facing, poor application performance can damage brand equity, with a resulting decline in revenue.

- Legal – Both business-led IT and corporate IT teams should seek legal advice when negotiating any medium to large technology purchase. Although the preferred approach is to engage qualified legal counsel, sometimes corporations desiring to streamline the legal review process will ask an experienced attorney to provide a short list of legal “must haves.” This list informs business and IT leaders involved in contract negotiations on what to expect and what to ensure is in the final agreement. In almost all cases, vendors have much more experience with contract negotiations than their customers. This imbalance of experience and knowledge puts a company at a disadvantage in understanding the give and take surrounding legal terms and conditions. The vendor’s advantage also extends to commercial terms (i.e., pricing), as business leaders are not always familiar with industry discounts offered at quarter or year-end closing. Money is inevitably “left on the table.”

- Compliance – While the Security and Legal NFRs focus on the importance of compliance with statutory and trade group requirements, regulatory compliance is also an important consideration. For public companies, this includes Sarbanes-Oxley compliance in the form of IT General Controls for the management of financial information. Customers may also place obligations on companies that process their proprietary or legally-protected information in the form of SSAE 18 SOC 2 Type II or ISO 27001 audits. Companies that pursue business-led IT without considering the impact of these compliance regimes on purchased or custom-developed applications can expose themselves to fines, censure and litigation.

- Security – Most businesses have proprietary information that they want to keep private and out of public view for competitive reasons. Many businesses also manage legally-protected customer personally identifiable information (PII) and protected health information (PHI) that carry certain obligations. These legal obligations (e.g., Europe’s GDPR, U.S. HIPAA and California’s CCPA) extend to how, when and where PII and PHI can be used, with the intention of preventing abusive business practices that exploit this information for the benefit of the data holder and not the customer or patient. Outside of legal obligations, industry consortiums like the Payment Card Industry Security Standards Council (PCI SSC) stipulate requirements for capturing, processing, transmitting and storing credit card data (PCI DSS 4.0). Failure to abide by these requirements can result in fines and censure (loss of the merchant’s ability to process credit card transactions). Software applications that are either purchased or custom-developed must comply with well-documented information security practices to ensure these proprietary, legal and industry trade group obligations are met.
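To put the uptime figures in the Disaster Recovery item in perspective, it can help to translate a percentage into hours of permitted downtime. The sketch below is illustrative only: the function name `allowed_downtime_hours` is hypothetical, and it assumes a 365-day year with downtime measured against the full period, which may differ from how a particular vendor's service-level agreement defines the measurement window or excludes planned maintenance.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; assumes a non-leap year


def allowed_downtime_hours(uptime_percent: float,
                           period_hours: float = HOURS_PER_YEAR) -> float:
    """Return the downtime (in hours) permitted by an uptime percentage.

    Hypothetical helper for illustration; a real SLA may measure
    availability per month or exclude scheduled maintenance.
    """
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return period_hours * (100 - uptime_percent) / 100


if __name__ == "__main__":
    # The two ends of the range cited in the Disaster Recovery item.
    for uptime in (99.5, 99.95):
        hours = allowed_downtime_hours(uptime)
        print(f"{uptime}% uptime allows about {hours:.2f} hours of downtime per year")
```

Under these assumptions, 99.5% uptime permits roughly 43.8 hours of downtime per year, while 99.95% permits roughly 4.4 hours. That tenfold difference is worth weighing against the business criticality of the application.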

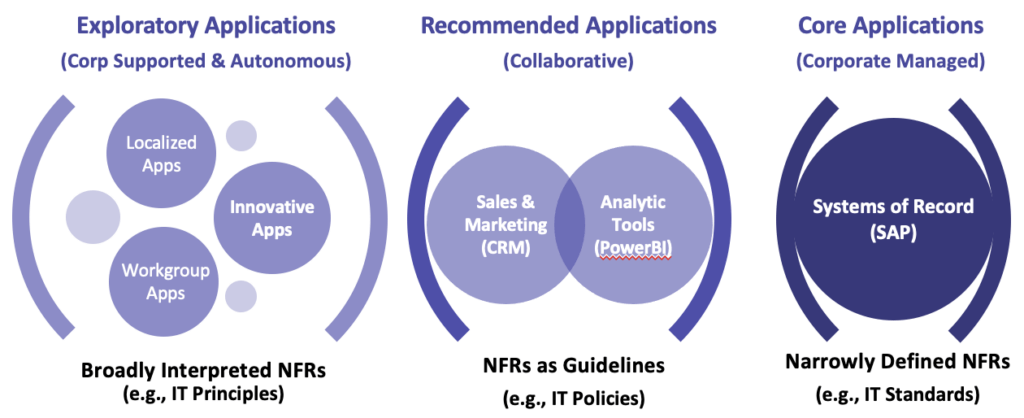

Cimphoni has developed the guided autonomy approach to manage the tension between corporate IT functions and business-led IT, balancing the business need for speed and agility against the risks inherent in not addressing NFRs. This approach allows for deviation from standards, namely in the form of technology policies and principles. These policies and principles recognize the need to balance complexity against the business value of the application.

What does this mean? As an example, functionally robust applications, like ERP platforms, that are used across the enterprise and have multiple integration points need to comply with IT standards (e.g., specific server operating system, database management system, application development language, etc.). Conversely, applications used at the workgroup level can get by with less restrictive policies. Policies may not stipulate an integration pattern but may suggest several patterns that would work. These policies may not require specific vendor hardware and software, but would provide a list of acceptable vendors. Principles are even broader and generally apply to applications used by individuals. They would suggest implementation of minimal security controls (e.g., two-factor authentication), but not provide a list of specific vendors to use.
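One way to picture the tiering described above is as a simple lookup from an application's scope of use to the level of governance applied. The sketch below is a minimal illustration, not Cimphoni's model: the names `Scope`, `Governance` and `governance_for` are hypothetical, and the three-way mapping reflects only the examples in the preceding paragraph, not the four application classes in Figure 2.

```python
from enum import Enum


class Scope(Enum):
    """Who uses the application (illustrative categories only)."""
    ENTERPRISE = "enterprise"
    WORKGROUP = "workgroup"
    INDIVIDUAL = "individual"


class Governance(Enum):
    """How prescriptive the NFR guidance is (illustrative labels)."""
    STANDARD = "standard"    # specific technologies are mandated
    POLICY = "policy"        # several acceptable patterns or vendors are listed
    PRINCIPLE = "principle"  # broad guidance, e.g. minimal security controls


# Assumed mapping, based on the examples in the paragraph above.
GOVERNANCE_BY_SCOPE = {
    Scope.ENTERPRISE: Governance.STANDARD,
    Scope.WORKGROUP: Governance.POLICY,
    Scope.INDIVIDUAL: Governance.PRINCIPLE,
}


def governance_for(scope: Scope) -> Governance:
    """Look up the governance level suggested for a given scope of use."""
    return GOVERNANCE_BY_SCOPE[scope]


if __name__ == "__main__":
    for scope in Scope:
        print(f"{scope.value}: {governance_for(scope).value}")
```

In practice, the classification would likely weigh more than scope alone, such as the number of integration points and the business value of the application.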

The guided autonomy framework shown in Figure 2 describes the NFRs placed on software applications based on the four classes of applications discussed in the white paper: Corporate Managed, Collaborative, Corporate Supported and Autonomous. The governance model is designed to create agreement between corporate IT and business-led IT on the NFRs and how they can be applied to balance benefit and risk.

To learn more about Cimphoni’s Guided Autonomy Governance Model and how it can benefit your organization, download the white paper today, or contact us to discuss how we can help you improve the success of projects that balance the needs of all constituents in software selection, implementation and support.